The Difference Between a Data Lake and a Data Warehouse

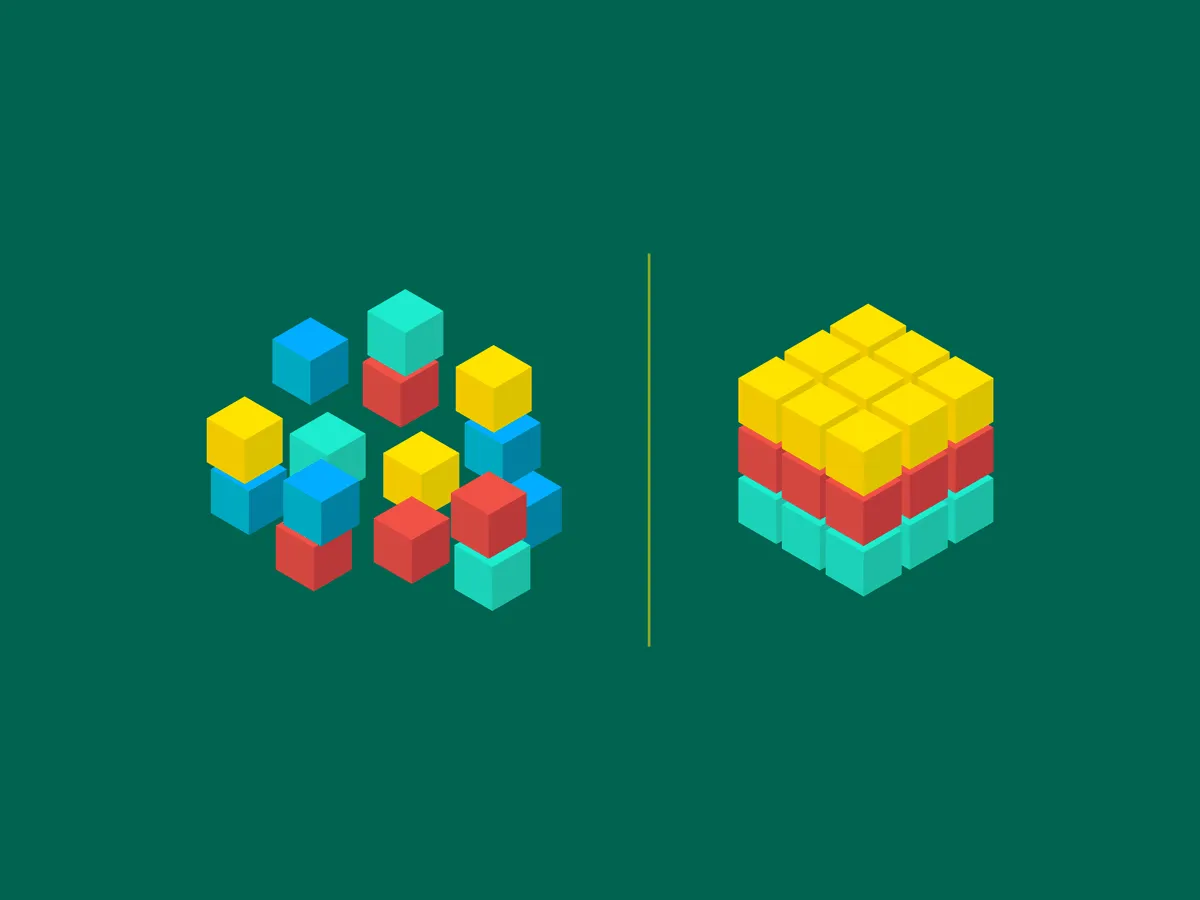

A data lake and a data warehouse are both systems for storing and managing large amounts of data, but they differ in several key ways. A data lake is a repository that can store raw, often unstructured data from a variety of sources. It is designed to be flexible and scalable, and it does not require data to be structured in a specific way before it is stored. Data lakes are most often used by researchers and analysts to tease out insights from disparate data sets.

In contrast, a data warehouse is a structured repository for storing and managing cleaned, processed data. Data in a data warehouse is typically organized into tables and schemas. Like a data lake, a data warehouse can serve as the corpus for in-depth analysis. But the structured nature of the warehouse also makes it well suited to delivering data into a target application.

Another key difference between a data lake and a data warehouse is how data is accessed and used. In a data lake, data is typically used for ad hoc analysis and exploration. In a data warehouse, data is typically accessed through predefined reports and dashboards or through queries from an application.
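To make the contrast in structure concrete, here is a minimal Python sketch. It assumes a list of JSON strings as a stand-in for raw files in a data lake and an in-memory SQLite database as a stand-in for a warehouse; both are simplifications, and the sample records and the `properties` table are hypothetical. The lake side imposes structure only when data is read (often called schema-on-read), while the warehouse side enforces a schema when data is written (schema-on-write).

```python
import json
import sqlite3

# Hypothetical raw records as they might land in a data lake: heterogeneous
# JSON documents stored as-is, with no structure enforced on write.
raw_lake = [
    '{"address": "12 Oak St", "beds": 3, "price": 450000}',
    '{"address": "9 Elm Ave", "price": "510,000", "notes": "corner lot"}',
    '{"parcel_id": "A-77", "zoning": "R1"}',
]


def explore_lake(records):
    """Schema-on-read: parse each record at query time and report
    whichever keys happen to be present across all of them."""
    keys = set()
    for line in records:
        keys.update(json.loads(line).keys())
    return sorted(keys)


# Stand-in warehouse: an in-memory SQLite table with a fixed schema.
conn = sqlite3.connect(":memory:")
conn.execute(
    """CREATE TABLE properties (
           address TEXT NOT NULL,
           beds    INTEGER,
           price   INTEGER NOT NULL
       )"""
)


def load_warehouse(conn, records):
    """Schema-on-write: insert only records that fit the table's schema
    and return how many were rejected."""
    rejected = 0
    for line in records:
        doc = json.loads(line)
        price = doc.get("price")
        if "address" not in doc or not isinstance(price, int):
            rejected += 1  # does not fit the schema, so it is not loaded
            continue
        conn.execute(
            "INSERT INTO properties (address, beds, price) VALUES (?, ?, ?)",
            (doc["address"], doc.get("beds"), price),
        )
    conn.commit()
    return rejected
```

With these sample records, `explore_lake(raw_lake)` lists every key seen across the documents, while `load_warehouse(conn, raw_lake)` loads only the first record and reports two rejections: one price is stored as a string and one record has no address. A real pipeline would usually try to repair such records in a transform step rather than simply dropping them.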

Data moves into and out of the data warehouse through “pipelines”. Unlike a pipeline for oil or gas, however, these are not physical structures. Instead, a data pipeline is a set of processes that move data from one place to another.

The process that gets data into a warehouse is known as ETL, short for extract, transform, and load. It involves three steps:

- Extract: The first step in the pipeline is to extract data from the source. This may involve accessing an on-premises file, querying a database, or scraping web pages to gather the data.

- Transform: Once the data has been extracted, it is typically transformed into a format suitable for loading into the data warehouse. This may involve cleaning the data, removing duplicates, and applying any necessary conversions or calculations.

- Load: The final step in the pipeline is to load the data into the data warehouse. This may involve creating new tables or appending the data to existing tables.
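The three steps above can be sketched in Python as three small functions chained together. This is a minimal illustration under stated assumptions, not a production pipeline: the inline CSV stands in for a source system, an in-memory SQLite database stands in for the warehouse, and the `listings` table and its columns are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this might be an on-premises file,
# a database query result, or scraped web pages.
SOURCE_CSV = """address,city,price
12 Oak St,Austin,450000
12 Oak St,Austin,450000
 9 Elm Ave ,austin,510000
77 Pine Rd,Dallas,
"""


def extract(text):
    """Extract: read raw rows from the source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: trim and normalize values, drop incomplete rows,
    and remove exact duplicates."""
    seen = set()
    clean = []
    for row in rows:
        if not row["price"].strip():
            continue  # incomplete record: no price
        record = (
            row["address"].strip(),
            row["city"].strip().title(),
            int(row["price"]),
        )
        if record not in seen:
            seen.add(record)
            clean.append(record)
    return clean


def load(conn, records):
    """Load: create the target table if needed, then append the records."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS listings "
        "(address TEXT, city TEXT, price INTEGER)"
    )
    conn.executemany("INSERT INTO listings VALUES (?, ?, ?)", records)
    conn.commit()


def run_pipeline(conn, text):
    """Run extract, transform, and load in order."""
    load(conn, transform(extract(text)))
```

Running `run_pipeline(sqlite3.connect(":memory:"), SOURCE_CSV)` leaves two rows in `listings`: the duplicate Oak St row is removed, the Elm Ave row is trimmed and its city normalized, and the Pine Rd row is dropped because it has no price.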

Data warehouse pipelines are typically designed to automate the ETL process so that it runs on a regular schedule, keeping the data warehouse up to date. In addition, data warehouse pipelines often include error-checking and quality-control measures to ensure that the data is accurate and consistent.
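Quality-control measures vary widely between pipelines. As one hedged example, the Python sketch below checks each record against a few illustrative rules (a present and unique `id`, a non-empty `address`, a positive integer `price`) and aborts the run if too large a share of records fail. The field names, the rules, and the `max_reject_ratio` threshold are assumptions for illustration. In a scheduled pipeline, a check like this would typically run between the transform and load steps; the scheduler itself (for example cron or a workflow orchestrator) is not shown.

```python
from dataclasses import dataclass, field


@dataclass
class QualityReport:
    valid: list = field(default_factory=list)
    rejected: list = field(default_factory=list)  # (record, problems) pairs


def check_quality(records, max_reject_ratio=0.1):
    """Split records into valid and rejected ones, then raise if the share
    of rejected records exceeds max_reject_ratio. Rules are illustrative."""
    report = QualityReport()
    seen_ids = set()
    for rec in records:
        problems = []
        rec_id = rec.get("id")
        if rec_id is None:
            problems.append("missing id")
        elif rec_id in seen_ids:
            problems.append("duplicate id")
        if not rec.get("address"):
            problems.append("missing address")
        price = rec.get("price")
        if not isinstance(price, int) or price <= 0:
            problems.append("price must be a positive integer")
        if problems:
            report.rejected.append((rec, problems))
        else:
            seen_ids.add(rec_id)
            report.valid.append(rec)
    total = len(records)
    if total and len(report.rejected) / total > max_reject_ratio:
        # Fail loudly so a bad batch never reaches the warehouse.
        raise ValueError(
            f"{len(report.rejected)} of {total} records failed quality checks"
        )
    return report
```

Raising an error, rather than silently loading a partial batch, is one common design choice: it stops the scheduled run and surfaces the problem, while a small number of rejects below the threshold can be logged and reviewed separately.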

Getting data into the warehouse is just the beginning. For application developers, the goal is, of course, to retrieve data from the warehouse and then render it in a target application, often dynamically at the click or tap of a user.

Extracting data from a data warehouse typically involves running a series of queries that select the specific data needed. This data is then transformed, if necessary, to fit the format and structure of the target application. Finally, the transformed data is loaded into the application, typically through a batch process or a real-time stream.
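A minimal Python sketch of this query, transform, and deliver flow follows, again assuming an in-memory SQLite database as a stand-in for the warehouse. The `listings` table, the sample rows, the `fetch_listings_for_app` function, and the JSON shape (including the `displayPrice` field) are all hypothetical; a real application would typically call something like this from an API endpoint when a user clicks or taps.

```python
import json
import sqlite3


def demo_warehouse():
    """Build a tiny hypothetical warehouse table for the example."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE listings (address TEXT, city TEXT, price INTEGER)")
    conn.executemany(
        "INSERT INTO listings VALUES (?, ?, ?)",
        [
            ("12 Oak St", "Austin", 450000),
            ("9 Elm Ave", "Austin", 510000),
            ("77 Pine Rd", "Dallas", 389000),
        ],
    )
    return conn


def fetch_listings_for_app(conn, city, limit=20):
    """Query one city's listings and reshape the rows into the JSON
    payload a hypothetical front end expects."""
    # Query: select only the data the application needs.
    rows = conn.execute(
        "SELECT address, price FROM listings WHERE city = ? "
        "ORDER BY price DESC LIMIT ?",
        (city, limit),
    ).fetchall()
    # Transform: rename fields and format prices for display.
    results = [
        {"address": address, "displayPrice": f"${price:,}"}
        for address, price in rows
    ]
    # Deliver: serialize to JSON for the application.
    return json.dumps({"city": city, "results": results})
```

The `?` placeholders make this a parameterized query, which keeps user input such as the city name out of the SQL text itself. That matters when queries are triggered directly by user actions.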

In sum, the core distinction between a data lake and a data warehouse is the way each platform handles data storage and access. Both systems are valuable tools for managing big data, but they serve different purposes based on the needs of the end user. Working with Real Estate API offers the benefits of both.

Because we have amassed an enormous body of property data (hundreds of attributes on more than 150M properties nationwide), clients are able to perform the same intense analysis that is typically done through a data lake. This includes using our data sets to train AI and machine learning models. At the same time, we have structured the nation’s property data into a schema that is optimized for delivering data into consumer applications with lightning speed. This ‘Lake House’ approach is novel in the industry, and is one of the many reasons engineers looking to build the next generation of real estate apps turn to REAPI.